Handling Enterprise Web Application Sessions

The basics of web session handling

Web server sessions are a well-known mechanism that web applications use to keep state in an environment that is stateless by nature. At its base, HTTP has no notion of state; it is just a request-response protocol. Unfortunately, a stateless protocol copes poorly with applications that need information to persist over a number of request-response cycles. A shopping cart is a typical example: temporary information must be kept across many request-response cycles until the check-out is finalized.

Over the years there have been many attempts to add state information to this environment: cookies, information saved in the delivered page and posted back with the form (a mechanism perfected by Microsoft ASP.NET), and of course the web session.

Web sessions can be implemented in many ways, but all implementations have two things in common: some data is saved on the server, and there is a means of identifying that data between requests. Using this identifier, the data can be retrieved on subsequent requests. Of course, the identifier itself still needs to be persisted between requests, as described later.

Mechanisms for saving data on the server

- Data saved in files

This is a simple way to persist data on the server: the data is serialized to plain files and the file name is used to identify it. This mechanism is often used with PHP. It is very simple to implement and use but is not safe enough for some uses.

- Data saved in the same process as the server component

The server component that processes requests is usually long-lived and can hold the data in memory between requests. Of course, a crash of the component causes the loss of all saved data.

- Data saved in a dedicated process on the server

This is a variation of the previous method in which data is saved in a dedicated process, specially built to handle session data and thus more robust and less likely to crash. This does not come for free: it incurs a performance penalty because the data must be sent to another process. The data is still lost if the server crashes or needs a restart.

- Data saved on a separate server

The data is still saved in memory, but this time on a separate server or farm of servers. The cost of transferring data over the network can be pretty high, but the reliability might be more important.

- Data saved in a database

This method avoids losing the data, but again some performance must be traded in. The cost of saving the data to the database might be high, but the permanent storage and transactional guarantees might be worth the trade-off.

Most current web frameworks including J2EE and .NET Framework have implementations of all these mechanisms.

Mechanisms for identifying the data between requests

- Using a cookie

A short identifier that uniquely identifies the data on the server, or more usually the entire session, is saved as a session cookie. The cookie is persisted on the client side and sent to the server with each request, usually until the client application that handles the series of requests is closed.

- Using the URL

The identifier is written directly in the URL of the page. The URL is parsed on the server and the extracted identifier is used to locate the data or the entire session.

- Using a hidden field

The identifier is written in a hidden HTML form field and sent back to the server when a new request is made.

Most current frameworks support all these mechanisms. The cookie is usually the default, but in some cases the other mechanisms are used as a fallback.
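The two halves described so far, data kept on the server and an identifier that travels with each request, can be sketched in a few lines of C#. This is only an illustration of the in-process variant; the class name InMemorySessionStore and its methods are made up for this example and do not correspond to any framework API.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;

// Hypothetical in-process session store: the data lives in the memory of the
// server component and is keyed by an opaque identifier.
public class InMemorySessionStore
{
    private readonly Dictionary<string, Dictionary<string, object>> sessions =
        new Dictionary<string, Dictionary<string, object>>();
    private readonly object gate = new object();

    // Creates an empty session and returns the identifier the client must send back.
    public string Create()
    {
        byte[] bytes = new byte[16];
        using (RandomNumberGenerator rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(bytes); // hard-to-guess identifier
        }
        string id = BitConverter.ToString(bytes).Replace("-", "");
        lock (gate)
        {
            sessions[id] = new Dictionary<string, object>();
        }
        return id;
    }

    // Returns the data saved for the identifier, or null if it is unknown.
    // (Sketch only: the returned dictionary itself is not synchronized.)
    public Dictionary<string, object> Find(string id)
    {
        lock (gate)
        {
            Dictionary<string, object> data;
            return sessions.TryGetValue(id, out data) ? data : null;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var store = new InMemorySessionStore();

        // First request: no identifier yet, so the server creates a session.
        string sessionId = store.Create();
        store.Find(sessionId)["cart"] = "book";

        // Next request: the client sends the identifier back
        // (cookie, URL or hidden field) and the data is found again.
        Console.WriteLine(store.Find(sessionId)["cart"]);   // book
        Console.WriteLine(store.Find("unknown") == null);   // True
    }
}
```

Everything in the store disappears when the process stops, which is exactly the weakness of the in-process mechanism described above.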

Handling the web session in a more powerful way

All the mechanisms covered above are used simply to save some data over a series of requests. The data can be anything and the frameworks do not care about its meaning. The framework that implements the web session mechanism just saves a bunch of data and returns it when requested. An obvious limitation is that the session is, well, just a session, limited to the time the client is up and running.

Have you used Amazon to buy something? It is interesting how they handle the shopping cart data. Instead of saving this data for the duration of the session (as long as your web browser is open, for example) they persist it indefinitely. This is a shift from the classical way of doing things, but a very powerful and significant one. Instead of giving the impression of something temporary they basically tell you: “this is your place, you can do anything you want and we will keep your data exactly as you left it until you come back!”.

But this approach also needs a shift from the usual session handling, because to implement a permanent “session” the application needs to understand the data. Imagine, for example, what would happen if the shopping cart saved an item with a $100 price tag and, after a month and several price reductions, the user came back and bought the product at the original price: he would not be very happy if he found out!

The way to handle the permanent “session” is to treat it not as a session but as normal application model data. If you are using a relational database to persist the application data, you just need a couple more tables to handle the “temporary” data. In the shopping cart example you can have a table with a reference to the product, the original price and the date of the last access. The price to charge can be retrieved directly from the current product price when the shopping cart page is displayed.

But this mechanism can be used not only for fancy web sites but also for normal enterprise-class applications. Complex applications usually need a number of steps to enter or modify data. Filling in this data can take quite some time, and it can be very frustrating to have to do it all at once, especially when the network is temporarily unavailable or the damn browser just needed a rest. Imagine you have a web enterprise “session” mechanism in place, and any data that is saved, presumably using several complex forms, is treated as temporary data until the user commits it. The final validation can take place at the last stage, when all the data is available, and the user has the chance to correct some of the information entered earlier before the final save is performed.
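As a rough sketch of that idea, the cart line below is ordinary model data that remembers the price at the time the item was added, while the price actually charged is always looked up from the current product data. The class and member names (Product, CartItem, CartPricing) are assumptions made for this example, and an in-memory dictionary stands in for the database tables.

```csharp
using System;
using System.Collections.Generic;

public class Product
{
    public int Id { get; set; }
    public string Name { get; set; }
    public decimal CurrentPrice { get; set; }
}

// A persisted cart line: normal application data, not opaque session data.
public class CartItem
{
    public int ProductId { get; set; }
    public decimal PriceWhenAdded { get; set; }
    public DateTime LastAccess { get; set; }
}

public static class CartPricing
{
    // The price to charge always comes from the current product data.
    public static decimal PriceToCharge(CartItem item, IDictionary<int, Product> catalogue)
    {
        return catalogue[item.ProductId].CurrentPrice;
    }

    // Lets the cart page tell the user that the price changed since the item was added.
    public static bool PriceChanged(CartItem item, IDictionary<int, Product> catalogue)
    {
        return PriceToCharge(item, catalogue) != item.PriceWhenAdded;
    }
}

public static class Program
{
    public static void Main()
    {
        var catalogue = new Dictionary<int, Product>();
        catalogue[1] = new Product { Id = 1, Name = "Headphones", CurrentPrice = 100m };

        var item = new CartItem
        {
            ProductId = 1,
            PriceWhenAdded = 100m,
            LastAccess = new DateTime(2011, 1, 1)
        };

        // A month later the price has been reduced.
        catalogue[1].CurrentPrice = 80m;

        Console.WriteLine(CartPricing.PriceToCharge(item, catalogue)); // 80
        Console.WriteLine(CartPricing.PriceChanged(item, catalogue));  // True
    }
}
```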

Architecture styles

The following is a brief introduction to architectural styles, not intended as a complete reference but as a quick scan-through. For a more complete analysis you might want to read one of the materials referenced at the end of this post.

Layered architecture

The simplest and certainly the best-known architecture style is the layered architecture. There are many examples of the layered pattern applied in the IT industry, such as the OSI model, which describes network architecture in 7 layers. The concept is simple: each layer interacts only with the layer below it and delivers services to the layer immediately above. The main advantage of layers is the stratification of the concerns implemented in a system; making each layer perform a certain area of functions makes the system simpler to understand and easier to maintain. When implemented correctly, the upper layers can concentrate on implementing functions needed by their users (e.g. business functions) and rely on lower layers to handle the more basic system needs (e.g. infrastructure functions). A classical example of a layered architecture is the following:

In this diagram, the user interface layer, responsible for displaying information and handling interaction, uses services from the business layer, which is responsible for performing the actual system functions. The business layer in turn uses services from the data layer, which is responsible for storing and retrieving data.

A disadvantage of this style is decreased performance, because each call that comes from the upper layer must pass through all the layers underneath.

You must balance the advantages and disadvantages of this style and use it when appropriate. Sometimes, to boost performance, calls from an upper layer jump over an intermediate layer and go directly to a lower layer.

However this is not good practice and undermines the main advantages of this architectural style: lower complexity and better maintainability.

Pipe and filter architecture

One of the oldest styles of architecture recognized as such is the pipes and filters architecture. This style uses processing components that consume and produce the same kind of data, named filters, and connections between these components, named pipes. Each component processes the data it receives through the pipe from the previous component. The filters can also run concurrently, each working as data from the previous filter becomes available. A very well-known system built using this architecture is the Unix command line, but other domains can use this style as well. The diagram below is an example of an image processing system for noise reduction composed of 3 filters:

The clock stereotype in the image above denotes a real-time component, which reinforces the idea that the style can be applied to very different systems. The advantage of this style is that complex systems can be built by combining simple components. Each component receives some data from its inbound pipe, processes the data and sends the result to the outbound pipe. The components can then be combined in new and ingenious ways, reusing much of the existing work. For example, replacing the last filter with a “Noise enhancer” component produces a completely different output. As a drawback, the style can only be applied to a certain class of systems, and the processed data may need to have special characteristics for the style to be used to its full potential.
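The composition idea can be sketched with C# iterators, where each filter takes a sequence and yields a sequence of the same kind, and the lazy enumeration plays the role of the pipe. The three filters below are invented for illustration and operate on plain integers rather than image data.

```csharp
using System;
using System.Collections.Generic;

public static class Filters
{
    // Filter 1: drops negative samples.
    public static IEnumerable<int> DropNegative(IEnumerable<int> input)
    {
        foreach (int sample in input)
        {
            if (sample >= 0) yield return sample;
        }
    }

    // Filter 2: limits every sample to a maximum value.
    public static IEnumerable<int> Clamp(IEnumerable<int> input, int max)
    {
        foreach (int sample in input)
        {
            yield return Math.Min(sample, max);
        }
    }

    // Filter 3: multiplies every sample by a factor.
    public static IEnumerable<int> Scale(IEnumerable<int> input, int factor)
    {
        foreach (int sample in input)
        {
            yield return sample * factor;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        int[] source = { 5, -3, 12, 7 };

        // Each sample flows through the whole chain as soon as the
        // previous filter produces it; nothing is buffered in between.
        IEnumerable<int> pipeline =
            Filters.Scale(Filters.Clamp(Filters.DropNegative(source), 10), 2);

        Console.WriteLine(string.Join(",", pipeline)); // 10,20,14
    }
}
```

Swapping or reordering the calls gives a different system from the same parts, which is the reuse the style promises.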

Client Server and N-tier architecture

The client-server architecture style became very well known when the explosion of the internet brought it into common vocabulary. On the internet, a browser (client) responsible for user interaction connects to a web portal (server) responsible for data processing and HTML formatting. There are many examples of client-server architectures. The style initially appeared from the need to centralize processing and data in a common location and to allow changes to be implemented without modifying the whole system. In a system composed only of desktop clients, any modification would presumably require redeployment of all clients, an operation that incurred high costs.

It is not easy to balance the amount of functionality implemented on the server against the amount implemented on the client, because of the conflicting needs and requirements. For example, you would want more processing on the client when a fast user interface is desirable, but you also want as much processing as possible done on the server so that changes are more easily deployed. Web browser clients are traditionally thin clients where little or no processing is done except displaying server-formatted pages and sending input. Lately, however, there is a growing tendency to add more processing on the clients, HTML5 being an example of this trend. Another aspect that is hard to balance is the amount of data exchanged between the client and the server. You would want very little data transmitted for performance reasons, but the users might demand a lot of additional information.

The N-tier architecture style can be seen as a generalization of the client-server architecture. The style appeared from the need to place system functionality on more than one processing node. This style of architecture is naturally supported by the layered architecture, and more often than not there is confusion between n-tier and n-layer architectures. As a rule of thumb, tiers refer to processing nodes while layers refer to the stratification of system functionality. The diagram below is a classical example of an n-tier architecture:

As with layered architecture you need to make a decision regarding the number of tiers balancing the performance, separation of concerns, deployment complexity, hardware etc.

Publisher-subscriber architecture

The publisher-subscriber name comes from the well-known newspaper distribution model, where subscribers register themselves to receive copies of newspapers from the publisher. The model works about the same way (well, maybe without the payment part) in a software system. Data or messages are distributed to a list of subscribers registered with the publisher. The subscribers can later unsubscribe and will then no longer receive data or messages.

The diagram below shows a general example of this model:

Database systems are known to implement a publisher-subscriber model for data replication, but the model can also be used for the exchange of messages. The big advantage of this model is the decoupling of the communication channel from the end points. The subscribers do their own bookkeeping by registering with and un-registering from the publisher, and the publisher's only job is to send data to each subscriber in its list. Dynamic end point management can have many advantages; however, keeping track of the subscribers and of the data sent to them is pretty involved and adds complexity, especially during operation.
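A minimal in-memory sketch of the model, assuming a hypothetical generic Publisher class: subscribers register and un-register themselves, and the publisher only walks its list.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical publisher: it knows nothing about subscribers except
// that each one can accept a message of type T.
public class Publisher<T>
{
    private readonly List<Action<T>> subscribers = new List<Action<T>>();

    public void Subscribe(Action<T> subscriber)
    {
        subscribers.Add(subscriber);
    }

    public void Unsubscribe(Action<T> subscriber)
    {
        subscribers.Remove(subscriber);
    }

    public void Publish(T message)
    {
        // Iterate over a copy so a subscriber may unsubscribe while being notified.
        foreach (Action<T> subscriber in subscribers.ToArray())
        {
            subscriber(message);
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var publisher = new Publisher<string>();
        var received = new List<string>();

        Action<string> reader = m => received.Add("reader:" + m);
        Action<string> archive = m => received.Add("archive:" + m);

        publisher.Subscribe(reader);
        publisher.Subscribe(archive);
        publisher.Publish("issue 1");

        // After un-subscribing, the reader gets no further messages.
        publisher.Unsubscribe(reader);
        publisher.Publish("issue 2");

        Console.WriteLine(string.Join(", ", received));
        // reader:issue 1, archive:issue 1, archive:issue 2
    }
}
```

A real implementation would also have to deal with the bookkeeping mentioned above, such as failed deliveries and subscribers that disappear without un-registering.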

Event driven architecture

In an event-driven architecture the components are completely unaware of the communication end points. Communication is handled by a special component at the infrastructure level. This might be seen as an evolution of the publisher-subscriber style where, instead of registering with a publisher, a component just receives all messages, or a filtered set of them, from any component that uses the same infrastructure.

The events are sent by a component, usually without a determined end point, and any component interested in the type of event can receive it together with the attached data. There are many advantages to this approach, such as the possibility of adding and removing components without a huge impact on the system, easy component relocation, the possibility of extending the messages without impacting components that are not yet ‘upgraded’, and so on. Of course this does not come for free: this kind of architecture is not something one can add later; it must be built in from the beginning. Off-the-shelf Enterprise Service Bus components try to mimic this model at a higher level, with the promise of interconnecting components that were not specifically constructed to work in a service-oriented architecture, but with some drawbacks such as complexity, a tendency towards hub-and-spoke architectures and performance issues in high-throughput systems.
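The difference from the publisher-subscriber style can be sketched with a small in-process event bus: components talk only to the bus and declare which type of event they are interested in. The EventBus class and the two event types are hypothetical names used for this example; a real infrastructure component would add queuing, filtering and distribution across processes.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical infrastructure component: routes events by their type.
public class EventBus
{
    private readonly Dictionary<Type, List<Action<object>>> handlers =
        new Dictionary<Type, List<Action<object>>>();

    public void Subscribe<TEvent>(Action<TEvent> handler)
    {
        List<Action<object>> list;
        if (!handlers.TryGetValue(typeof(TEvent), out list))
        {
            list = new List<Action<object>>();
            handlers[typeof(TEvent)] = list;
        }
        list.Add(e => handler((TEvent)e));
    }

    // The sender does not know who, if anyone, receives the event.
    public void Publish(object evt)
    {
        List<Action<object>> list;
        if (handlers.TryGetValue(evt.GetType(), out list))
        {
            foreach (Action<object> handler in list.ToArray())
            {
                handler(evt);
            }
        }
    }
}

public class OrderPlaced { public int OrderId { get; set; } }
public class PaymentFailed { public int OrderId { get; set; } }

public static class Program
{
    public static void Main()
    {
        var bus = new EventBus();
        var log = new List<string>();

        // Two independent components interested in the same event type.
        bus.Subscribe<OrderPlaced>(e => log.Add("billing saw order " + e.OrderId));
        bus.Subscribe<OrderPlaced>(e => log.Add("shipping saw order " + e.OrderId));

        bus.Publish(new OrderPlaced { OrderId = 42 });
        bus.Publish(new PaymentFailed { OrderId = 42 }); // nobody is listening

        Console.WriteLine(string.Join("; ", log));
        // billing saw order 42; shipping saw order 42
    }
}
```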

Other styles

There are many other architecture styles not covered here, such as peer-to-peer, hub-and-spoke and interpreter. For an introductory list check out Software Architecture: Foundations, Theory, and Practice, or, for a more comprehensive description, Pattern-Oriented Software Architecture Volume 1: A System of Patterns.


Little details

I was registering for the Red Hat Virtual Experience forum and was filling in the usual “name-address-can we contact you” form when, to my surprise, I got a form validation error because the password was not between 4 and 8 characters long. Yes, this is right: one of the big enterprise software houses is running an online web site where the maximum password length is 8 characters, a length that is known to be “crackable” with medium levels of ability and resources. I don’t even want to discuss 4-character passwords.

This is one of those little details that can turn the credibility a company has with you around by 180 degrees.

The sad thing is that I see this kind of behavior a lot lately, and mostly from ‘big’ names. For example, passwords that can only be alphanumeric on an IBM program site I am part of (it crashed when I included a couple of ‘non-standard’ characters), or one of the banks where I recently opened an account, which requires passwords of exactly 8 numeric characters for its online banking system, and so on. After so many big security leaks that originated with a weak password being guessed, you would expect this to change. For example, in December last year the Gawker network was completely hacked and thousands of records with personal data were stolen.


What is software quality? Depends who is asking

Software quality is sometimes an elusive subject. Defining quality is complex because a different perspective will, most of the time, give a whole new definition.

Take for example what the user perceives as the quality attributes of a system. When thinking about the user perspective, usability is the first to come to mind. Most users just muddle through an application's functions without taking time to learn it. If the user doesn’t figure out what to do he will just leave. If the user really needs to do a task, such as at work using a company system, he will be very angry if he cannot find his way through the system functions.

Another one of the first things a user notices about an application is the performance. Performance is a show stopper if there is not enough of it. On the other hand, extreme performance is not needed most of the time and can have a negative impact on other quality attributes. The availability of the system is also an important aspect from the user perspective. A system must be there when needed or it is useless.

Security is also perceived as fundamental from the user's point of view, especially as in recent years more and more emphasis has been put on securing personal data.

On the other hand, from the developer perspective the quality of a system looks a bit different. The developer will (or should) think about maintainability, as the system will most likely change in one form or another as a result of new functions or corrections to the ones already implemented. Some components of the system might be reused if the proper thinking is applied, and reusability can have a big impact on future implementations.

One of the latest trends in software development has been to use tests from the inception phases of the project. Techniques such as test-driven development are applied with great success to a large class of problems. But to be effective, the testability of the system must be built into the product from the beginning, not as an afterthought.

From the business perspective the cost of building the system or the time to market can be very important, as these can decide whether it is built at all. The projected lifetime of a system can also be important, because a system needs to be operated and maintained, maybe for several years, and this will incur some kind of operating costs. A new system will most likely not exist in isolation and certainly will not change overnight what a company does, so integration with legacy systems might be an important aspect. Other important aspects for the business can be things like roll-out time or robustness.

So, in conclusion, what is software quality? Well, it seems it depends who is asking!


Why Performance is Bad for your Software System

Performance is one of the so-called non-functional requirements (or quality attributes) of a system and you can find it in most analysis documents. The fact that the analyst has written it in the analysis document is a good thing, but is it correctly specified most of the time?

When talking about performance there are many terms in use, and they are not always used consistently. For example, one person can talk about performance and mean ‘response time’ while another understands ‘throughput’. Let’s see a list of terms that are associated with performance and their most common meaning (1):

- Response time is the amount of time it takes for the system to completely process a request

- Responsiveness is about how quickly the system acknowledges a request without actually processing it

- Latency is the minimum amount of time to get a response even if there is no processing

- Throughput is how much processing can be done in a fixed amount of time

There are even more terms related to performance, like load, load sensitivity and efficiency, but the ones above are what people generally understand when talking about performance. It is important to distinguish between these terms because they can have very different impacts on the system. For example, to improve the responsiveness of the system you most likely need to change the architecture, maybe by implementing something like asynchronous processing.

But the system architecture should take into consideration a lot more than just performance. These are the most common quality attributes that need to be planned for in a software system (2):

- Agility is the ability of a system to be both flexible and undergo change rapidly

- Flexibility is the ease with which a system or component can be modified for use in applications or environments other than those for which it was specifically designed

- Interoperability is the ability of two or more systems or components to exchange information and to use the information that has been exchanged

- Maintainability is the ease with which a software system or component can be modified

- Reliability is the ability of the system to keep operating over time

- Reusability is the degree to which a software module or other work product can be used in more than one computing program or software system

- Supportability is the ease with which a software system can be operationally maintained

- Security is a measure of the system’s ability to resist unauthorized attempts at usage and denial of service

- Scalability is the ability to maintain or improve performance while system demand increases

- Testability is the degree to which a system or component facilitates testing

- Usability is the measure of a user’s ability to utilize a system effectively

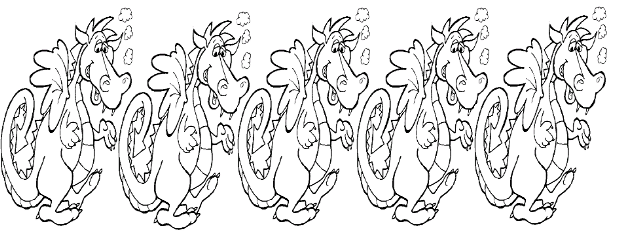

Usually, modifying one of these attributes affects other attributes as well. For example, improving agility will most of the time also improve maintainability. But how about performance? How does a performance improvement, such as a better system response time, affect the other attributes?

Let’s take some examples of things that someone can do to improve performance and look at their effects:

- A change from a web service interface to remote method invocation to improve the latency will negatively affect reusability

- A change from an object oriented processing to a stored procedure to improve the response time will negatively affect flexibility and maintainability

- A change from a clean implementation of an algorithm to an optimized one will negatively affect maintainability and testability

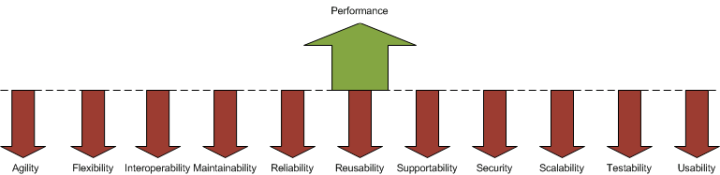

The following diagram illustrates the likely effects of improving the performance of a system to other attributes:

The performance improvement negatively affects every one of the quality attributes described above. So it is better to carefully analyse the trade-offs between the performance improvement and the other quality attributes. Most of the time ‘enough’ performance is just the right target when the system is considered as a whole.

1. Patterns of Enterprise Application Architecture by Martin Fowler

2. Implementing System Quality Attributes

Benchmarking – a marketing tool

There has recently been a heated discussion after http://ormbattle.net/ published benchmarks claiming to demonstrate that NHibernate performance is poor compared with other ORM frameworks. At first sight it looks like a valuable tool for assessing the speed of different frameworks, but on deeper analysis, and in the light of the comments in Ayende's post «Benchmarks are useless, yes, again», it isn’t as clear anymore:

Trying to compare different products without taking into account their differences is a flawed approach. Moreover, this benchmark is intentionally trying to measure something that NHibernate was never meant to perform. We don’t need to try to optimize those things, because they are meaningless, we have much better ways to resolve things.

Making a benchmark is simple: make a loop and measure things. But this kind of benchmark only shows the speed of running some meaningless instructions in an unrealistic loop. Making a benchmark that handles real-world scenarios is a much harder undertaking, one that could possibly give real insight into using one framework or another. But the frameworks are usually very different, and a way of doing things in one framework often has no direct correspondence in the other. It will just be a different thing to measure. In the end it is all about marketing.

If you want to show that something is faster you can certainly make a benchmark to show it, as another interesting battle at a whole other level clearly demonstrates. Microsoft published on its site a benchmark report entitled «Benchmarking IBM WebSphere 7 on IBM Power6 and AIX vs. Microsoft .NET on HP BladeSystem and Windows Server 2008» that claims to demonstrate that .NET on Windows is much faster than WebSphere on AIX. IBM's response was to make a benchmark of its own showing the opposite.

The bottom line is that benchmarks should be viewed with reservations, as most often they are just marketing tools.


Working hard versus working smart

Working hard on a project doesn’t always give the best productivity; in fact, more often than not it doesn’t. Here are some of the approaches I’ve seen on projects:

Working hard

The code-like-hell approach may work for a small project, but on any project longer than a couple of months it will go wrong. After the initial period fatigue will prevail, and the end will not make anyone on the team happy even if the project eventually delivers its product.

Fuzzy start

There are times when the start of a project is not so clear: nobody knows what to do and there is a lot of fuzziness in the work that is done. When starting a big project it seems that there is so much time that a lot of not very productive activities are allowed to take place. But after some time the schedule starts to ring a bell and everyone starts to work hard. In the end everybody is tired and the overall productivity is not very high.

Working smart


But there is a better way: working smart means working as hard as you can while keeping a steady pace that you can sustain forever. The productivity may not be the highest when measured over short periods, but overall it is higher than with any other approach.

So try to work smart and you will do more than trying to work hard!


Understanding Liskov Substitution Principle

The Liskov Substitution Principle, in its simplified, object-oriented form, says that:

Derived classes must be substitutable for their base classes.

The principle looks simple and obvious; at first look we are tempted to ask whether the opposite is even possible: can one make a class that is not substitutable for its base class? Technically speaking, no. If one makes a derived class and the compiler does not complain, everything should be fine. Or is it not?

Let’s see a simple example:

using System;
using System.IO;

public class MusicPlayer {
    public virtual void Play(string fileName) {
        // reference implementation: plays any type of music file
    }
}

// Optimized mp4 player
public class Mp4MusicPlayer : MusicPlayer {
    public override void Play(string fileName) {
        // Path.GetExtension returns the extension including the leading dot
        if (!string.Equals(Path.GetExtension(fileName), ".mp4", StringComparison.OrdinalIgnoreCase))
            throw new ArgumentException("can only play mp4");
        // optimized implementation for playing
    }
}

In the above example we have a reference implementation for a music player. The reference implementation would play all types of files, but maybe not with the best performance or quality. An optimized player for a certain type of file, for example the MP4 file type, is, as far as the C# interface is concerned, a specialized type of music player.

So what’s the problem? The above Mp4MusicPlayer class just violated the Liskov substitution principle. One cannot substitute Mp4MusicPlayer for MusicPlayer because the latter works with any music file while the former works only with certain specific files. Where MusicPlayer would work with an MP3 file, Mp4MusicPlayer would throw an exception and the program would fail.

Where did this go wrong? As it seems, there is more to an interface than meets the eye. In our case the preconditions of the derived class are stronger than those of the base class, and even if a C# interface does not contain the preconditions in its definition, you have to keep them in mind when designing the inheritance tree.

But there is more to it than that. The above is only one way in which the Liskov substitution principle can be violated. In more general terms it is a problem of abstractions. When one defines a base class or an interface one actually defines an abstraction. And when one makes a derived class one implicitly agrees to satisfy the same abstraction.

In the example above the MusicPlayer abstraction is “something that can play any music file”

But Mp4MusicPlayer abstraction is “something that can play MP4 music files”

The abstractions are different, and this is the root cause of the problem. When the abstractions are not appropriate the situation can get nasty.
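One possible way out, shown here only as a sketch and not as the definitive fix, is to make the base abstraction honest: “something that can play the files it says it can play”. The CanPlay and PlayCore members and the ReferenceMusicPlayer class are introduced for this example. With this design the precondition of Play is the same for every derived class (CanPlay must return true), so no derived class strengthens it.

```csharp
using System;
using System.IO;

public abstract class MusicPlayer
{
    // Part of the abstraction: every player declares what it can play.
    public abstract bool CanPlay(string fileName);

    // Same precondition for all players: CanPlay(fileName) must be true.
    public void Play(string fileName)
    {
        if (!CanPlay(fileName))
            throw new ArgumentException("unsupported file type: " + fileName);
        PlayCore(fileName);
    }

    protected abstract void PlayCore(string fileName);
}

public class ReferenceMusicPlayer : MusicPlayer
{
    public override bool CanPlay(string fileName) { return true; }

    protected override void PlayCore(string fileName)
    {
        Console.WriteLine("reference: " + fileName);
    }
}

public class Mp4MusicPlayer : MusicPlayer
{
    public override bool CanPlay(string fileName)
    {
        return string.Equals(Path.GetExtension(fileName), ".mp4",
            StringComparison.OrdinalIgnoreCase);
    }

    protected override void PlayCore(string fileName)
    {
        Console.WriteLine("optimized: " + fileName);
    }
}

public static class Program
{
    // Client code written against the abstraction only.
    public static void PlayWithFirstCapable(MusicPlayer[] players, string fileName)
    {
        foreach (MusicPlayer player in players)
        {
            if (player.CanPlay(fileName))
            {
                player.Play(fileName);
                return;
            }
        }
        throw new ArgumentException("no player for " + fileName);
    }

    public static void Main()
    {
        MusicPlayer[] players = { new Mp4MusicPlayer(), new ReferenceMusicPlayer() };
        PlayWithFirstCapable(players, "song.mp4"); // optimized: song.mp4
        PlayWithFirstCapable(players, "song.mp3"); // reference: song.mp3
    }
}
```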

Liskov substitution principle is all about abstractions

There are multiple ways in which the abstraction can be broken. A wrong abstraction can be hacked around in simple cases, but eventually it will come back to bite you. We might be able to install an electronic device on the wooden horse in the right of the above image if we need the “horse” abstraction to neigh, but we will never persuade it to eat a handful of hay.


DBF Data Export Library

Recently I’ve written a small library for exporting data to DBF and other file formats for an internal application at my current employer. This library is now open source and can be downloaded from CodePlex.

The application required data to be exported for integration with several third-party applications we do not control. These applications require the old DBF file format or the CSV format. We also needed export to Excel files, this time as a strategic feature. We made a couple of attempts at DBF/CSV files using existing methods but we found several problems.

- JET using Microsoft.Jet.OLEDB was plain unusable because of wrong formatting in some cases, or data files that were not always readable by our target applications

- Using the OLE DB Provider for Visual FoxPro was a lot better; we didn’t have any problem with it until we needed to move the application to a 64-bit system. VFPOLEDB does not work in a 64-bit environment and there are no plans to support one. This was a show stopper for us.

At this point we considered using a plain C# solution without any other dependency. This solution allows us to fix future problems without totally replacing the underlying technology, as we had to do with the external OLE DB drivers. We believe this solution will pay back its initial development time in the long run.

While there are several other solutions out there for writing DBF/CSV files, none satisfied our requirements. The libraries we found were either of poor quality or oriented toward data manipulation for specific file formats. We needed a library for exporting data from an existing data source to several file formats (DBF and CSV mainly, but also others to come). Mashing up existing libraries and trying to adapt them to our requirements looked daunting, so we decided we would be better off writing a library from scratch. Heavy integration systems like BizTalk were also a no-go.

The result was TeamNet Data File Export, a small C# library that can take data from an existing source (DataSet and IList are currently implemented) and write files in different formats (DBF and CSV are currently implemented). The library was designed for extensibility, so adding other formats should be pretty easy. Office XML (Excel 2003) support is planned and will probably be added in the next few days.

The library is very easy to use and has a fluent interface. This is an example of using a data set as data source to export data to a DBF file:

DataFileExport
    .CreateDbf(sourceDataSet)
    .AddCharacterField("AString", 30)
    .AddNumericField("ANumber", 10, 0)
    .Write(filePath);

While DBF files are certainly not thrilling to work with, there are times when you need them. I hope you will find this library handy then.


Backup Database in SqlServer with Date and Time Information in the File Name

The built-in back-up mechanism of SqlServer allows you to either overwrite the back-up file or append to it. I usually prefer to have each back-up in its own file; this way I can easily choose which older back-ups to delete, and immediately see the last one. The following script makes a back-up with a file name that contains date and time information and can be used in a job step:

declare @currentDate datetime
set @currentDate = GetDate()

declare @fileName varchar(255)
-- Builds C:\BKP\MyDatabase_yyyyMMdd_HHmm.bak;
-- Replicate left-pads month, day, hour and minute with '0' to two digits
set @fileName = 'C:\BKP\MyDatabase_'
    + cast(Year(@currentDate) as varchar(4))
    + Replicate('0', 2 - Len(cast(Month(@currentDate) as varchar(2))))
    + cast(Month(@currentDate) as varchar(2))
    + Replicate('0', 2 - Len(cast(Day(@currentDate) as varchar(2))))
    + cast(Day(@currentDate) as varchar(2))
    + '_'
    + Replicate('0', 2 - Len(cast(DatePart(hour, @currentDate) as varchar(2))))
    + cast(DatePart(hour, @currentDate) as varchar(2))
    + Replicate('0', 2 - Len(cast(DatePart(minute, @currentDate) as varchar(2))))
    + cast(DatePart(minute, @currentDate) as varchar(2))
    + '.bak'

backup database [MyDatabase] to disk = @fileName with NOFORMAT, NOINIT,
    name = N'MyDatabase-Full Database Backup',
    SKIP, NOREWIND, NOUNLOAD, STATS = 10

The back-up will be written to the file with a name like C:\BKP\MyDatabase_20090505_1346.bak.
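If the back-up is started from application code rather than from a job step, the same file name can be built in C# with a custom date format string. This is only a sketch of the naming part; the BackupFileName helper is a made-up name and the code does not run the back-up itself.

```csharp
using System;
using System.Globalization;

public static class BackupFileName
{
    // Builds <folder>\<database>_yyyyMMdd_HHmm.bak for the given timestamp.
    public static string Build(string folder, string database, DateTime timestamp)
    {
        // The invariant culture keeps the digits independent of the server's regional settings.
        string stamp = timestamp.ToString("yyyyMMdd_HHmm", CultureInfo.InvariantCulture);
        return folder + "\\" + database + "_" + stamp + ".bak";
    }
}

public static class Program
{
    public static void Main()
    {
        string name = BackupFileName.Build(
            @"C:\BKP", "MyDatabase", new DateTime(2009, 5, 5, 13, 46, 0));
        Console.WriteLine(name); // C:\BKP\MyDatabase_20090505_1346.bak
    }
}
```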

Hope it helps.
